• SECTION 3 •

ARTIFICIAL INTELLIGENCE

Here’s where things get murky and even harder to trace. The world of AI is changing SO fast – in fact, Nano Banana hadn’t even been publicly released when I started preparing for this talk. This is also where I know the least. While I’ve been trying to experiment with AI daily, both in my personal life and at the studio, I’ve also had a hard time figuring out where my morals lie.

Energy Consumption

I understand that AI requires HUGE amounts of data and servers, which, of course, require huge amounts of energy – potentially far more than ordinary internet use.

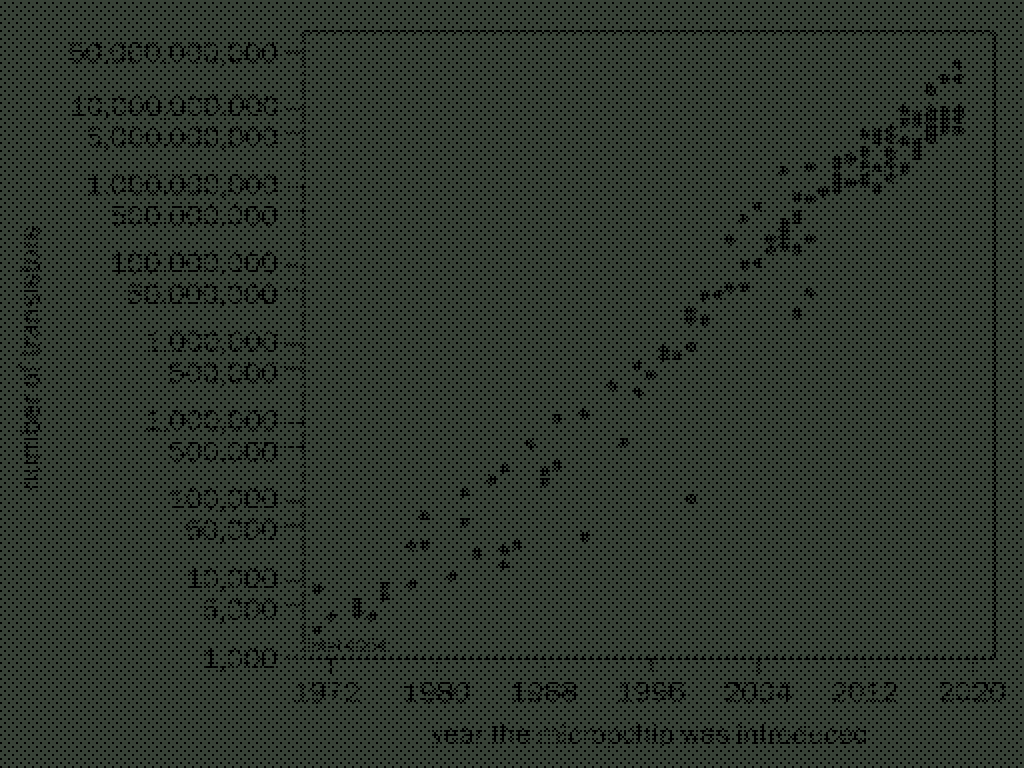

Look at Moore’s Law – it observed that the number of transistors on a chip would double roughly every two years, with the cost per transistor falling along the way. This “law” became a self-fulfilling prophecy, with tech companies striving to achieve the advancements promised in this graph.

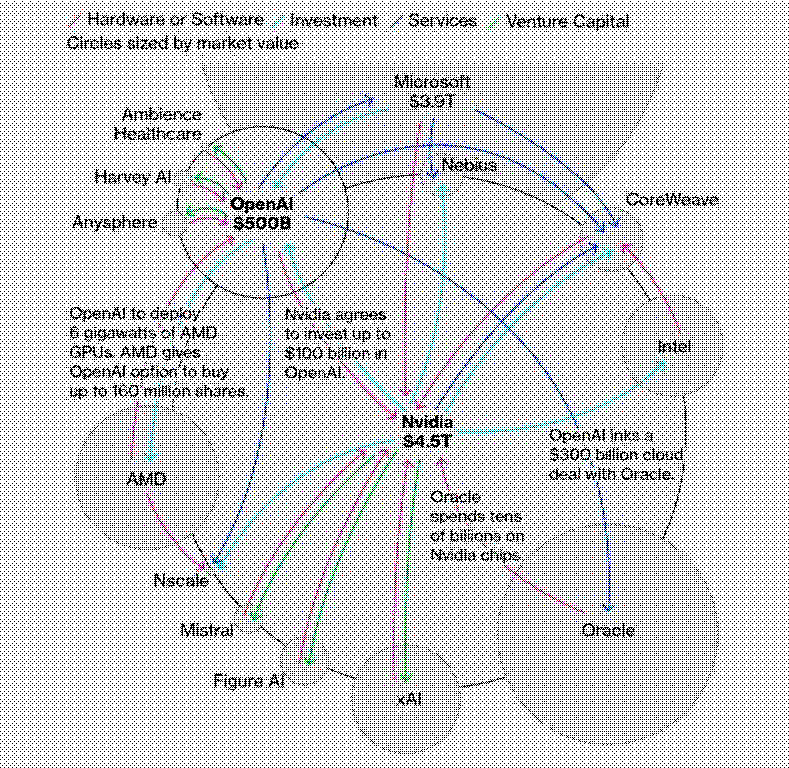

I can see something similar happening now with Nvidia and its soaring stock price, as well as in the closed loop of money circulating among the inner circle of AI companies.

There is no conclusive data yet.

We haven’t received conclusive data yet. But I still err on the side of caution.

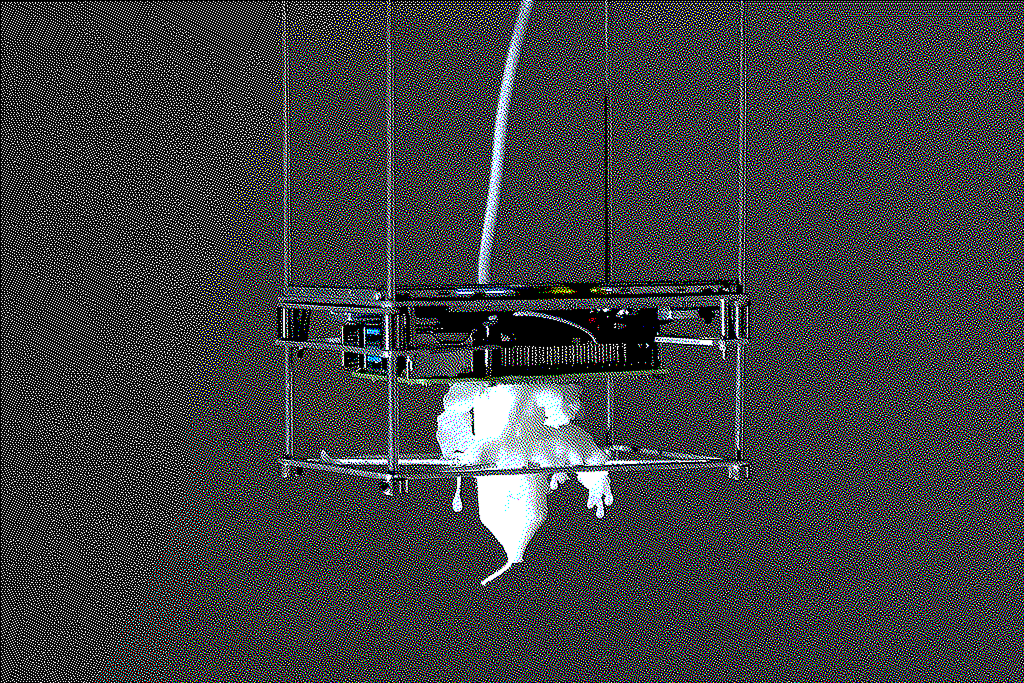

To Heat a Candle with the Warmth of You

Material realities of (AI) computation

Julia Vollmer

Every machine-generated thought consumes power and produces heat: a silent, residual trace of its activity. While we cannot see energy directly, its byproduct heat offers a way to feel and sense the invisible processes shaping our world. On the consumer side, the underlying material realities of these tools remain largely hidden.

Julia Vollmer did this really cool experiment. The heat generated by a few simple prompts was enough to melt a candle. And that’s just the end result of a single AI model – it doesn’t even begin to account for all the energy consumed in the steps before ChatGPT spits out its final answer.

AlphaGo

In 2016, DeepMind’s AlphaGo, an AI built to play Go, took on Lee Sedol, widely considered the best player of the decade. At the time, Go was considered the most intuitive, most creative, most human board game in existence. Early in the match, AlphaGo made a move that was considered incredibly creative, incredibly unusual. Everyone was shell-shocked. AlphaGo ended up winning.

AI made him more creative

But what happened next is that Lee started playing moves he would never have come up with before. He later said that AI helped him step up his game because it challenged him to be more creative. It played like no human had ever played, and that forced him to think in ways he hadn’t before. He reportedly went on a winning streak against human opponents after the match.

How can we frame AI?

Framing the kind of work we do using AI is going to be very important for us (especially for our/your generation of designers). AI can challenge us as designers to think differently and broaden our perceptions of wicked problems. At the same time, it can take “power” away from us if we don’t use it right. It’s why everyone is so worried about AI taking their jobs (but more on that later).

Slop factories with the wrong priorities

At its worst, AI can turn us into slop factories with the wrong priorities: not reading what others have generated, generating better-looking fingers on Nano Banana, and churning out more and more AI-personalized productivity apps.

Maybe AI can solve the AI crisis

But at its best, maybe AI can see patterns we humans can’t yet – finding creative solutions to the climate crisis or even designing systems for Artificial Intelligence itself to be more eco-friendly or carbon neutral.

The right tool for the job

My friend Meg has this metaphor about AI being another “tool for the job”. A painter wouldn’t use a fine brush to paint a mural; in the same way, we shouldn’t look to AI to do a job it isn’t suited for, like writing novels. Frank Chimero has a similar metaphor about wielding AI as an instrument – an extension of ourselves rather than a replacement for us.

“If men learn this, it will implant forgetfulness in their souls…”

In Plato’s Phaedrus, Socrates warns against writing because people would stop using their memories, and that would “create forgetfulness in the learners’ souls.” Now, with my mild dyslexia, I kinda wish he’d won that battle. Obviously, it now seems shortsighted to argue against writing. Writing is a technology we should continue to embrace – but we only know that in hindsight.

It’s hard to separate change aversion from a well-founded fear of things that might be bad for people and society.

When you are living through disruption, it’s hard to separate change aversion from a well-founded fear of things that might be bad for people and society. And even more challenging, these inventions can simultaneously be both good and bad; it’s all about how they are deployed, used, and managed.

Is it worth investing millions of dollars into getting those fingers right?

Early adopters are going to set the standards for how we use the technology – if we aren’t thoughtful about it now, we will build on that thoughtlessness with time. Is it worth investing millions of dollars into getting those fingers right?

I don’t want us to fall behind.

So, as an “early adopter”, I don’t want to fall behind – I don’t want to shun AI because I’m scared of it, but rather want to harness it. And maybe there’s a way to do good with it too.

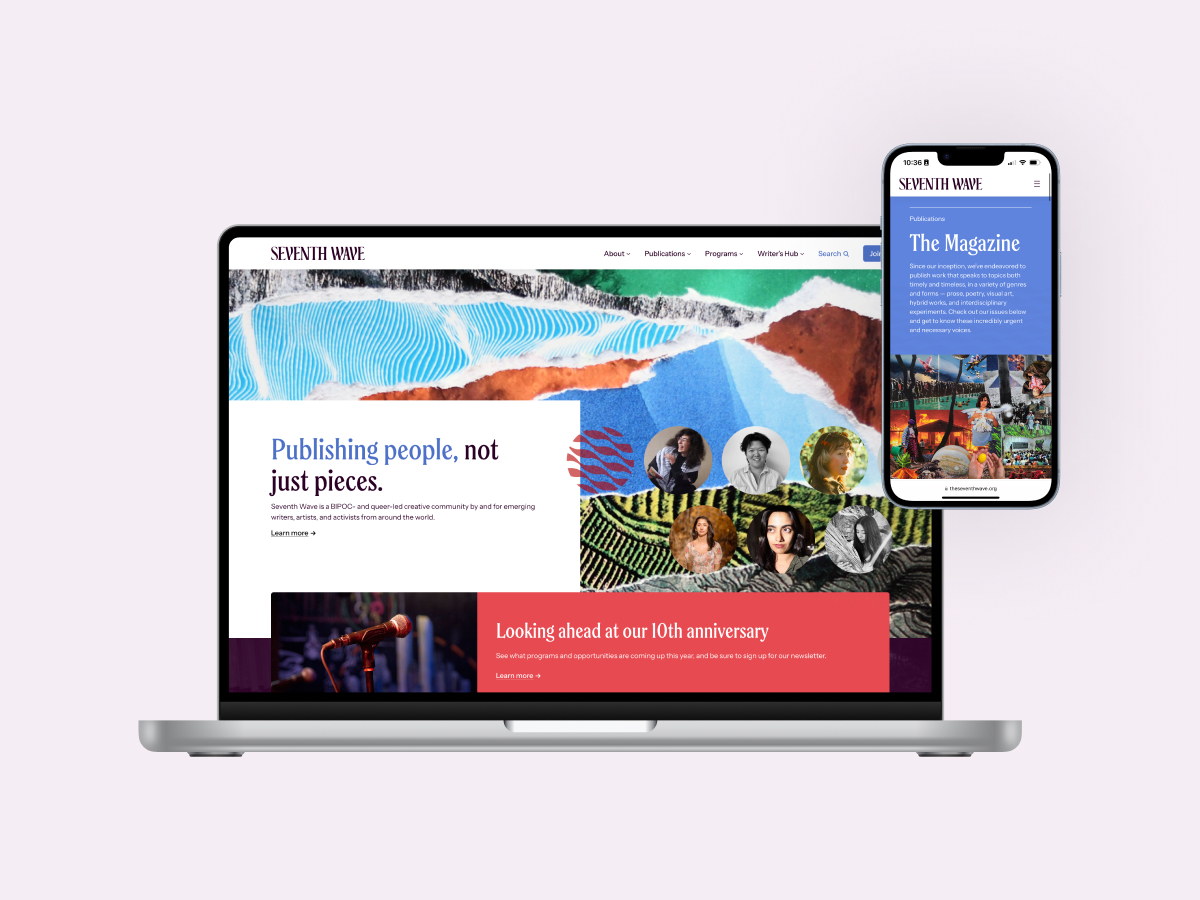

Seventh Wave

At Tandem, our focus has been on giving non-profits and other clients the right tools to save under-resourced workers hours of time and energy. And AI fits right in as a way to level the playing field.

I can see plenty of ways AI could have fit into our workflow when we were working on Seventh Wave at the studio, all through a spatial metaphor. We could’ve used AI to run complex migrations or do a lot of the grunt work, or we could’ve asked AI to direct us in reorganizing content or to do a first pass at it for us. But perhaps the most beneficial approach wouldn’t have been to direct it or have it direct us, but rather to collaborate with it, making design decisions together to actually streamline the website for a better overall experience.

Designing sustainably is about designing with parameters.

Designing with AI is also about designing with parameters.

Solving the AI climate crisis seems very similar to solving the climate crisis in general. While we need policies and large-scale changes, I think we can still, as individuals, decide how and where we use AI and where we don’t. We just learnt that designing sustainably is about designing with parameters (limited fonts, colors, etc.). Designing with AI is also about designing with parameters.

A close friend of mine refuses to use AI to generate images or video, both because of the large amounts of data and energy it consumes and because it doesn’t fit her design ethos. As a creative, she doesn’t feel generative AI is the best use of her time, and it uses over 3x the energy.

Parameters for play

I think I fall into a similar category. I’ve been setting myself some “parameters for play” while I experiment with AI in my workflow.

1. Use AI to do less

I’ve been using AI to actually do less. I’ve been using it to write faster, more efficient code that I probably wouldn’t be able to write myself. I’m using AI to quickly bridge the gap between designer and developer, and while I don’t have all the facts in place, maybe it’s actually more efficient.

2. Reduce, Reuse, and Recycle

Another parameter for play for me is to “Reduce, Reuse, and Recycle”. It’s a good north star for thinking about our consumption in real life – so why not apply it to the digital as well? I try to reduce AI consumption when possible, reuse prompts or information wherever I can instead of saying “that didn’t work” a million times, and recycle answers – take something and make it better or repurpose it (especially if I know I’ve looked something up or asked a certain question before).

3. Feel the burn

I don’t use AI to think or as a crutch. It’s like a workout: if you don’t feel the burn, you’re not really working out, right? I think cognitive work is the same way. So while I am really interested in using AI, I think that if something feels too easy, I’m probably not thinking or learning.

What are your parameters for play?

By the way, these are my parameters for play; they don’t have to be yours. Yours can be as simple as limiting yourself to 5 prompts a day, or only asking a question when you know you can’t Google it and get the same result (then again, Google also uses AI now, so maybe we’re all screwed). What are your parameters for AI play?